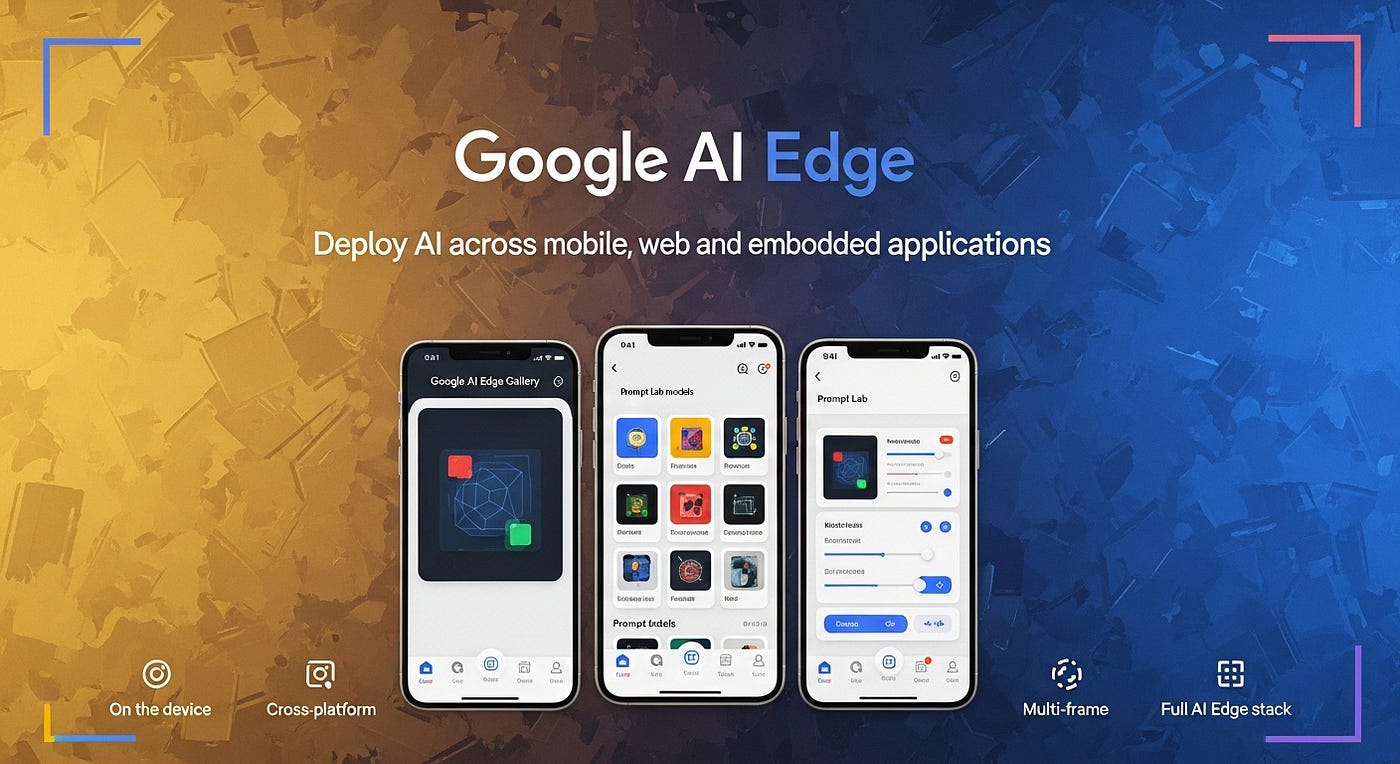

In an important move toward edge computing and privacy-focused AI deployment, Google has quietly released an experimental Android application that lets users run complex AI models directly on their smartphones without the need for an internet connection.

The app, called AI Edge Gallery, lets users download AI models from the popular Hugging Face platform and run them entirely on their devices. It supports tasks such as image analysis, text generation, coding assistance, and multi-turn conversations, and all data processing happens locally.

Released under an open-source Apache 2.0 license and accessible via GitHub instead of official app stores, the application is Google’s most recent attempt to address growing privacy concerns regarding cloud-based AI services while democratizing access to advanced AI capabilities.

According to Google’s user guide, “The Google AI Edge Gallery is an experimental app that puts the power of cutting-edge Generative AI models directly into your hands, running entirely on your Android devices.” It continues: “Once the model is loaded, explore a world of imaginative and useful AI use cases that all operate locally without requiring an internet connection.”

How Google’s Lightweight AI Models Achieve Cloud-Level Speed On Mobile Devices

The app is built on Google’s LiteRT platform, formerly known as TensorFlow Lite, and on the MediaPipe frameworks, both of which are designed to run AI models on resource-constrained mobile devices. The system supports models from several machine learning frameworks, including TensorFlow, PyTorch, Keras, and JAX.

The centerpiece of the offering is Google’s Gemma 3 1B model, a compact 529-megabyte language model that can process up to 2,585 tokens per second during prefill inference on mobile GPUs. That throughput enables sub-second response times for tasks like text generation and image analysis, putting the experience on par with cloud-based alternatives.
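To see what that prefill figure means in practice, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate: it assumes the quoted peak rate is sustained for the whole prompt, and the function name and prompt lengths are illustrative rather than taken from Google's documentation.

```python
# Peak prefill rate quoted for Gemma 3 on mobile GPUs (tokens per second).
PEAK_PREFILL_TOKENS_PER_SEC = 2585


def prefill_seconds(prompt_tokens: int,
                    tokens_per_sec: float = PEAK_PREFILL_TOKENS_PER_SEC) -> float:
    """Best-case time to ingest a prompt before the first output token.

    Assumes the peak rate holds for the entire prompt, which real devices
    may not achieve (thermal throttling, slower GPUs, CPU fallback).
    """
    if prompt_tokens < 0 or tokens_per_sec <= 0:
        raise ValueError("prompt_tokens must be >= 0 and tokens_per_sec > 0")
    return prompt_tokens / tokens_per_sec


if __name__ == "__main__":
    # Hypothetical prompt lengths, chosen only for illustration.
    for n in (256, 1024, 2048):
        print(f"{n:>5} prompt tokens -> {prefill_seconds(n):.2f} s of prefill")
```

Under this assumption, any prompt shorter than 2,585 tokens is ingested in under a second, which is the basis for the sub-second claim. Generating the response itself takes additional time.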

The app includes three main features: AI Chat for multi-turn conversations, Ask Image for visual question-answering, and Prompt Lab for single-turn tasks such as text summarization, code generation, and content rewriting. Users can switch between models to compare performance and capabilities, with real-time benchmarks showing metrics like time-to-first-token and decode speed.
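The two benchmark metrics named here can be illustrated with a minimal Python sketch. The definitions below are common conventions, not necessarily the exact formulas the app uses, and the `RunTiming` structure and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunTiming:
    """Timestamps (in seconds) for one generation run. Hypothetical structure."""
    request_sent: float        # when the prompt was submitted
    token_times: list[float]   # arrival time of each generated token, in order


def time_to_first_token(run: RunTiming) -> float:
    """Delay between submitting the prompt and receiving the first token."""
    if not run.token_times:
        raise ValueError("no tokens were generated")
    return run.token_times[0] - run.request_sent


def decode_tokens_per_sec(run: RunTiming) -> float:
    """Tokens generated per second after the first token has arrived."""
    if len(run.token_times) < 2:
        raise ValueError("need at least two tokens to measure decode speed")
    elapsed = run.token_times[-1] - run.token_times[0]
    if elapsed <= 0:
        raise ValueError("token timestamps must be increasing")
    return (len(run.token_times) - 1) / elapsed


if __name__ == "__main__":
    # Made-up timings: first token after 0.5 s, then one token every 0.5 s.
    run = RunTiming(request_sent=0.0, token_times=[0.5, 1.0, 1.5, 2.0, 2.5])
    print("time to first token (s):", time_to_first_token(run))
    print("decode speed (tokens/s):", decode_tokens_per_sec(run))
```

Time-to-first-token is dominated by prefill, while decode speed reflects how quickly the rest of the response streams in, so a model can score well on one and poorly on the other.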

In its technical documentation, Google mentioned optimization techniques that enable larger models to run on mobile devices, stating that “Int4 quantization cuts model size by up to 4x over bf16, reducing memory use and latency.”
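The “up to 4x” figure follows from the bit widths alone: bf16 stores each weight in 16 bits, while int4 uses 4. A rough Python sketch of the arithmetic is below. The one-billion-parameter count is a hypothetical example, and the estimate ignores quantization scales and any layers kept at higher precision, which is why real savings can fall short of 4x.

```python
# Bits used to store a single weight in each format.
BITS_PER_WEIGHT = {"bf16": 16, "int4": 4}


def weight_bytes(num_params: int, dtype: str) -> float:
    """Approximate storage for the weights alone, in bytes.

    Ignores per-block scales, zero points, and file metadata that real
    quantized model files also carry.
    """
    return num_params * BITS_PER_WEIGHT[dtype] / 8


if __name__ == "__main__":
    params = 1_000_000_000  # hypothetical one-billion-parameter model
    bf16_size = weight_bytes(params, "bf16")
    int4_size = weight_bytes(params, "int4")
    print(f"bf16: {bf16_size / 1e9:.1f} GB")
    print(f"int4: {int4_size / 1e9:.1f} GB")
    print(f"reduction: {bf16_size / int4_size:.0f}x")
```

Smaller weights also mean less data to move from memory on every token, which is where the latency benefit comes from.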

Why On-Device AI Processing Could Transform Enterprise Security And Data Privacy

The local processing approach addresses growing concerns about data privacy in AI applications, particularly in industries that handle sensitive information. By keeping data on-device, organizations can use AI capabilities while complying with privacy requirements.

This change represents a fundamental rethinking of the AI privacy equation. On-device processing turns privacy into a competitive advantage rather than a constraint that limits what AI can do. Businesses no longer have to choose between powerful AI and data protection; they can have both. Removing network dependencies also means that intermittent connectivity, historically a significant barrier for AI applications, no longer breaks core functionality.

The approach is particularly valuable for sectors like healthcare and finance, where data sensitivity regulations often limit cloud AI adoption. Field applications such as equipment diagnostics and remote work scenarios also benefit from the offline capabilities.

Organizations must, however, address the new security challenges that on-device processing introduces. Although the data itself is more secure because it never leaves the device, the focus shifts to protecting the devices and the AI models they hold. This opens up new attack vectors and requires different security strategies than traditional cloud-based AI deployments. Organizations must now consider device fleet management, model integrity verification, and protection against adversarial attacks that could compromise local AI systems.
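One simple form of model integrity verification is to compare a downloaded model file against a known-good checksum before loading it. The Python sketch below illustrates the idea with SHA-256. It is an assumption about how an organization might do this, not a description of what the AI Edge Gallery app does, and the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def model_is_intact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's hash matches the trusted value.

    The expected hash must come from a trusted channel (for example, a
    signed manifest), not from the same place as the model file itself.
    """
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A deployment would call `model_is_intact` with the path of the downloaded model and a checksum published by the organization, and refuse to load the model when the check fails. A checksum only detects tampering or corruption; it does not defend against adversarial inputs, which need separate measures.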

Google’s Platform Strategy Challenges The Dominance Of Apple And Qualcomm In Mobile AI

Google’s move comes amid intensifying competition in mobile AI. Apple’s Neural Engine, built into iPhones, iPads, and Macs, already powers real-time language processing and computational photography. Qualcomm’s AI Engine, integrated into Snapdragon chips, drives voice recognition and smart assistants in Android smartphones, while Samsung uses embedded neural processing units in its Galaxy devices.

Google’s strategy differs sharply from those of its rivals, however, because it emphasizes platform infrastructure over proprietary features. Rather than competing directly on specific AI capabilities, Google is positioning itself as the foundation layer that makes all mobile AI applications possible. The approach recalls successful platform plays in technology history, where controlling the infrastructure proved more valuable than controlling individual applications.

The timing of this platform strategy is especially astute. As mobile AI capabilities become increasingly standardized, the real value will go to whoever provides the tools, frameworks, and distribution channels that developers need. By making the technology open-source and publicly available, Google encourages broad adoption while keeping control of the underlying infrastructure that drives the entire ecosystem.

What Early Testing Reveals About The Current Challenges And Limitations Of Mobile AI

The app currently has a number of limitations that underscore its experimental nature. Performance varies greatly depending on device hardware: high-end smartphones such as the Pixel 8 Pro handle larger models with ease, while mid-tier devices may see higher latency.

Testing also revealed accuracy problems on some tasks. The app occasionally gave incorrect answers to specific questions, such as misstating the crew count of a fictional spacecraft or misidentifying comic book covers. Google acknowledges these limitations, and in testing the AI itself stated that it was “still under development and still learning.”

Installation remains cumbersome: users must enable developer mode on their Android devices and manually install the application from an APK file. Users also need to create a Hugging Face account to download models, which adds further friction to onboarding.

The hardware limitations highlight a fundamental problem in mobile AI: the tension between model complexity and device constraints. Unlike cloud environments, where computing resources can be scaled almost without limit, mobile devices must balance AI capability against battery life, thermal management, and memory limits. This forces developers to become experts in efficiency optimization rather than relying on raw computing power.

The Quiet Revolution In Your Pocket That Could Reshape The Course Of AI

With AI Edge Gallery, Google has released more than just another experimental app. The company has launched what may turn out to be the most significant development in artificial intelligence since the advent of cloud computing twenty years ago. Tech giants spent years building enormous data centers to support AI services, yet Google is now betting that the future belongs to the billions of smartphones people already own.

The change is more than just a technological one. Google aims to radically alter the way people relate to their personal data. Privacy breaches make the news every week, and regulators around the world are cracking down on data collection practices. Google’s move to local processing gives businesses and users a clear alternative to the internet’s long-standing surveillance-based business model.

Google timed this strategy carefully. Businesses are struggling to comply with AI governance regulations, while consumers’ concerns about data privacy are growing. Instead of going up against Apple’s tightly integrated hardware or Qualcomm’s specialized chips, Google is positioning itself as the foundation of a more distributed AI system. The company is building the infrastructure layer needed to run the next generation of AI apps on all kinds of devices.

As Google refines the technology, the app’s current problems (difficult installation, occasional incorrect answers, and inconsistent performance across devices) should diminish. The more important question is whether Google can manage this shift while maintaining its leading position in the AI sector.

AI Edge Gallery signals Google’s recognition that the centralized AI model it helped create may not be sustainable. Google is open-sourcing its tools and making on-device AI publicly accessible because it believes that controlling tomorrow’s AI infrastructure matters more than owning today’s data centers. If the plan succeeds, every smartphone becomes part of Google’s distributed AI network. That prospect makes this quiet app launch far more significant than its experimental label implies.