Most languages derive meaning from sentence structure and word order. For instance, “The box was on the cat” does not mean the same thing as “The cat sat on the box.” Over a lengthy text, such as a novel or financial document, the syntax of these sentences likely evolves.

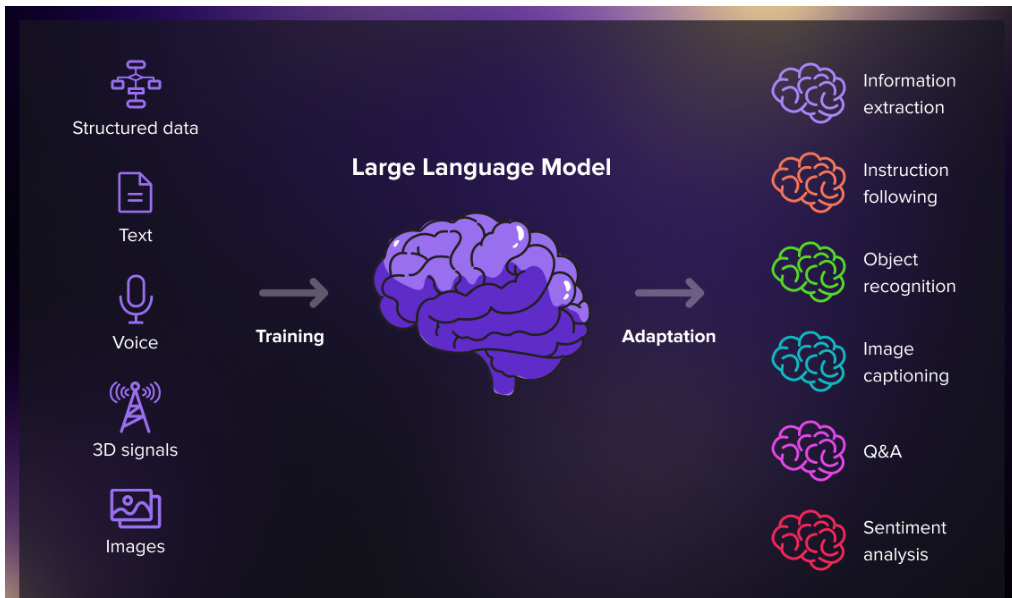

Similarly, a person might be tracking variables in a piece of code or following instructions that contain conditional actions. These are examples of state changes and sequential reasoning that we expect state-of-the-art artificial intelligence systems to handle well. However, the current state-of-the-art attention mechanism within transformers, the main architecture used in large language models (LLMs) to determine the importance of words, has theoretical and empirical limitations when it comes to such capabilities.

An attention mechanism enables an LLM to look back at earlier parts of a query or document and, based on its training, determine which details and words matter most. On its own, however, the mechanism cannot comprehend word order. It “sees” all of the input words, also known as tokens, at the same time and handles them in the order in which they are presented, so researchers have developed techniques to encode position information. For highly structured domains like language, this is crucial. However, the most common position-encoding technique, known as rotary position encoding (RoPE), is independent of the input data and considers only the relative distance between tokens in a sequence. This means that any two words that are four positions apart, such as “cat” and “box” in the example above, receive the same fixed mathematical rotation specific to that relative distance.
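The fixed, distance-only behavior of RoPE can be illustrated with a minimal sketch. It assumes a single two-dimensional feature pair and a hypothetical rotation frequency `theta`; practical RoPE implementations apply many such rotations at different frequencies across the feature dimensions. The helper names `rope_rotate` and `rope_score` are illustrative and not taken from any library.

```python
import numpy as np

def rope_rotate(vec, position, theta=0.1):
    """Rotate a 2-D vector by an angle proportional to its position.

    `theta` is a hypothetical frequency chosen for illustration.
    """
    angle = position * theta
    c, s = np.cos(angle), np.sin(angle)
    rotation = np.array([[c, -s], [s, c]])
    return rotation @ vec

def rope_score(query, key, query_pos, key_pos, theta=0.1):
    """Dot product of the position-rotated query and key."""
    rotated_query = rope_rotate(query, query_pos, theta)
    rotated_key = rope_rotate(key, key_pos, theta)
    return float(rotated_query @ rotated_key)

query = np.array([1.0, 0.5])
key = np.array([0.3, -0.8])

# Same relative distance (4 positions), very different absolute positions.
score_a = rope_score(query, key, query_pos=5, key_pos=1)
score_b = rope_score(query, key, query_pos=105, key_pos=101)

# A different relative distance (7 positions).
score_c = rope_score(query, key, query_pos=8, key_pos=1)

print(np.isclose(score_a, score_b))  # True: only the distance matters
print(np.isclose(score_a, score_c))  # False: a different distance changes the score
```

Nothing about the tokens that sit between the query and the key enters the computation, which is the limitation described above.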

Researchers at MIT and the MIT-IBM Watson AI Lab have developed an encoding technique called “PaTH Attention” that makes positional information adaptive and context-aware, rather than static as with RoPE.

“Transformers make it possible to model many domains accurately and scalably, but they have limitations when it comes to state tracking, a class of phenomena that is believed to underpin crucial capabilities that we want in our AI systems,” says Yoon Kim, the senior author of the study, who is an associate professor in the Department of Electrical Engineering and Computer Science (EECS), a researcher with the MIT-IBM Watson AI Lab, and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL). The crucial question, he adds, is how to enable state tracking while preserving the scalability and efficiency of transformers.

A new paper on this work was presented earlier this month at the Conference on Neural Information Processing Systems (NeurIPS). Kim’s co-authors include lead author Songlin Yang, an EECS graduate student and former MIT-IBM Watson AI Lab Summer Program intern; Kaiyue Wen of Stanford University; Liliang Ren of Microsoft; and Yikang Shen, Shawn Tan, Mayank Mishra, and Rameswar Panda of IBM Research.

The Route To Comprehension

Instead of assigning every word a fixed rotation based on the relative distance between tokens, as RoPE does, PaTH Attention is flexible, treating the in-between words as a path made up of small, data-dependent transformations. Each transformation, based on a mathematical operation known as a Householder reflection, acts like a tiny mirror that adjusts depending on the content of each token it passes. Each step in a sequence can influence how the model interprets information later on. The cumulative effect lets the system model how meaning changes along the path between words, not just how far apart they are. This approach allows transformers to keep track of how entities and relationships change over time, giving them a sense of “positional memory.” Think of it as walking a path while experiencing your surroundings and how they affect you. The team also developed a hardware-efficient algorithm that computes attention scores between every pair of tokens more efficiently, so that the cumulative mathematical transformation from PaTH Attention is compatible with fast GPU processing.
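The idea of accumulating reflections along the path between two tokens can be sketched as follows. This is a toy illustration under stated assumptions, not the authors’ implementation: it uses exact Householder reflections of the form I - 2ww^T, takes each in-between token’s vector directly as the reflection direction (a real model would presumably learn how to derive it from the token), and loops naively rather than using a hardware-efficient algorithm. The names `householder` and `path_score` are hypothetical.

```python
import numpy as np

def householder(direction):
    """Reflection matrix I - 2 w w^T, where w is `direction` scaled to unit length."""
    w = direction / np.linalg.norm(direction)
    return np.eye(len(w)) - 2.0 * np.outer(w, w)

def path_score(query, key, between):
    """Score the key against the query after reflecting it once per in-between token.

    Assumption: each in-between token vector is used directly as the
    reflection direction, purely for illustration.
    """
    transformed = key.copy()
    for token_vec in between:  # walk the path from the key toward the query
        transformed = householder(token_vec) @ transformed
    return float(query @ transformed)

query = np.array([1.0, 0.0])
key = np.array([1.0, 0.0])

# Two paths of the same length (two tokens) but with different content.
path_a = np.array([[1.0, 0.0], [0.0, 1.0]])
path_b = np.array([[0.0, 1.0], [0.0, 1.0]])

score_a = path_score(query, key, path_a)
score_b = path_score(query, key, path_b)
print(score_a)  # -1.0
print(score_b)  # 1.0
```

Both paths place the query and key the same distance apart, yet the scores differ because the content of the in-between tokens changes the accumulated transformation. A distance-only scheme would give both cases the same positional treatment.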

The MIT-IBM researchers then examined PaTH Attention’s performance on synthetic and real-world tasks, including reasoning, long-context benchmarks, and full LLM training, to determine whether it improved a model’s ability to track information over time. The team tested its ability to follow the most recent “write” command despite many distracting steps, as well as its performance on multi-step recall tests, tasks that are difficult for conventional positional encoding techniques like RoPE. The researchers also trained mid-size LLMs and compared them against other methods. PaTH Attention improved perplexity (that is, lowered it) and outperformed other approaches on reasoning benchmarks it wasn’t trained on. They also evaluated retrieval, reasoning, and stability with inputs of tens of thousands of tokens. PaTH Attention consistently proved capable of content-awareness.
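To make the “most recent write” idea concrete, here is a small sketch of what such a diagnostic episode might look like. The step format (`write`, `ignore`, `read`) and the helper names are assumptions made for illustration; they are not the benchmark used in the study.

```python
import random

def make_episode(num_steps, rng):
    """Build a hypothetical episode: random write/ignore steps, then one read."""
    steps = []
    for _ in range(num_steps):
        op = rng.choice(["write", "ignore"])
        steps.append((op, rng.randint(0, 9)))
    steps.append(("read", None))
    return steps

def expected_answer(steps):
    """Return the value of the most recent write (None if nothing was written)."""
    answer = None
    for op, value in steps:
        if op == "write":
            answer = value  # later writes overwrite earlier ones
    return answer

# The "ignore" steps are distractors: their values must not affect the answer.
episode = [
    ("write", 3),
    ("ignore", 7),
    ("write", 5),
    ("ignore", 1),
    ("ignore", 9),
    ("read", None),
]
print(expected_answer(episode))  # 5

# Randomly generated episodes of any length can be scored the same way.
random_episode = make_episode(20, random.Random(0))
print(expected_answer(random_episode))
```

Answering correctly requires tracking a state that changes as the sequence unfolds, rather than simply locating a token at a fixed distance.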

“We discovered that our new approach was able to outperform existing attention mechanisms, while maintaining their efficiency, on both real-world language modeling tasks and diagnostic tasks designed to test the limitations of transformers,” says Kim. “I’d be interested to see if, in [analyzing] proteins or DNA, these kinds of data-dependent position encodings, like PaTH, enhance the performance of transformers on structured domains like biology.”

Thinking More Broadly And Effectively

The researchers next investigated how the PaTH Attention mechanism would perform if it more closely mimicked human cognition, in which we disregard outdated or less relevant information when making decisions. To do this, they combined PaTH Attention with the Forgetting Transformer (FoX), another position encoding scheme that allows models to selectively “forget.” The resulting PaTH-FoX system adds a way to down-weight information in a data-dependent manner, achieving strong results across reasoning, long-context understanding, and language modeling benchmarks. In this way, PaTH Attention extends the expressive power of transformer architectures.
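One way to picture data-dependent down-weighting is to bias each attention logit by the accumulated log of per-token “forget gate” values lying between the key and the query. The sketch below assumes that formulation for illustration only; whether it matches FoX or PaTH-FoX in detail is not confirmed here, and the gate values are supplied by hand rather than computed from token content as a model would. The function name `forgetting_attention_weights` is hypothetical.

```python
import numpy as np

def forgetting_attention_weights(scores, forget_gates):
    """Causal softmax over scores biased by accumulated log forget gates.

    scores:       (T, T) raw query-key scores.
    forget_gates: (T,) values in (0, 1]; in a model these would be
                  computed from each token (an assumption in this sketch).
    """
    num_tokens = len(forget_gates)
    cum_log = np.cumsum(np.log(forget_gates))
    # bias[i, j] = sum of log(gate) over positions j+1 .. i
    bias = cum_log[:, None] - cum_log[None, :]
    logits = scores + bias
    causal = np.tril(np.ones((num_tokens, num_tokens), dtype=bool))
    logits = np.where(causal, logits, -np.inf)  # no attention to future tokens
    logits = logits - logits.max(axis=1, keepdims=True)
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)

scores = np.zeros((4, 4))  # equal raw scores isolate the gate's effect

no_forgetting = forgetting_attention_weights(scores, np.ones(4))
with_forgetting = forgetting_attention_weights(
    scores, np.array([1.0, 1.0, 0.1, 1.0])
)

print(no_forgetting[3])    # uniform: 0.25 for each of the four tokens
print(with_forgetting[3])  # approximately [0.045, 0.045, 0.455, 0.455]
```

With a small gate value at the third token, everything before it is down-weighted for later queries, which is the kind of selective forgetting the paragraph above describes.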

According to Kim, this kind of research is part of a larger effort to develop the “next big thing” in artificial intelligence. He notes that the development of “general-purpose building blocks that can be applied to wide domains,” such as “convolution layers, RNN [recurrent neural network] layers,” and, most recently, transformers, has been a key driver of both the deep learning and generative AI revolutions. Looking ahead, Kim points out that considerations like accuracy, expressivity, flexibility, and hardware scalability have been and will continue to be crucial. Developing new primitives that preserve or enhance expressivity while remaining scalable is, in his words, “the core enterprise of modern architecture research.”

This work was supported, in part, by the MIT-IBM Watson AI Lab and the AI2050 program at Schmidt Sciences.